Team Foundation Server: Server-side Validation & Interception

Some time ago I presented a simple way to version TFS Web Services in order to intercept requests and perform server-side validations.

In this post I will introduce another way of doing so.

Check out the (poorly documented) ITeamFoundationRequestFilter interface on MSDN. This interface is part of an extensibility mechanism called TFS Filters.

Using this interface as a regular TFS plug-in (meaning you deploy it in the plugins directory as you do with subscribers) you can inspect method executions, requests, etc.

It is very useful when you need to create an audit log, measure performance (recording execution time or call counts), diagnose connection problems, etc. In fact, when you connect to TFS from a version of Visual Studio earlier than 2010, an implementation of this filter (check Microsoft.TeamFoundation.ApplicationTier.PlugIns.Core.UserAgentCheckingFilter) throws an exception indicating that you need a patch to do so.

The latter is the functionality I am personally more interested in: validations.

The interface defines the following methods in order to handle a request life cycle (in chronological order):

- BeginRequest: called when a new TFS request (ASP.NET) is about to be executed. At this phase, you do not know which operation is about to be executed (at least not directly).

- RequestReady: called when security and message validations have already happened (only at Web Service level). At this phase, you do not know which operation is about to be executed (at least not directly).

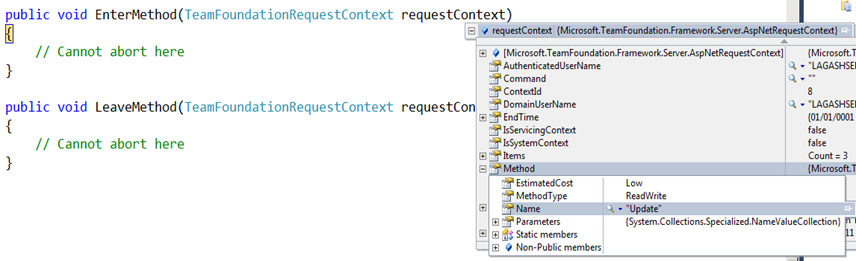

- EnterMethod: called when a logical TFS method is about to be called. At this phase, you now know which operation TFS is about to execute (and you even have its parameters).

- LeaveMethod: called when a logical TFS method has already been executed.

- EndRequest: called when ASP.NET request is about to end.

Sample debugging session (method information available when EnterMethod is executed):

Turns out that you can only abort the execution of the current request in the BeginRequest and RequestReady methods:

- The only way to do so is to throw an exception that inherits from Microsoft.TeamFoundation.Framework.Server.RequestFilterException.

- At this phase (BeginRequest or RequestReady), you do not yet know which method is being called by the client (at least not directly).

- Even if you throw an exception from the EnterMethod method, you will not abort the current execution (some internal try-catch code prevents the error from aborting it). The exception will only be logged.

Note: As in my previous post, the next code sample is meant only as an experiment :).

By doing some ASP.NET HttpRequest manipulation, though, you can implement a validation filter in the RequestReady or BeginRequest methods:

- Get the current ASP.NET HttpContext.Request

- Read the entire InputStream and then restore its original state (assuming it is not a forward only stream).

- Deserialize the SOAP Envelope and read the parameters from there.
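The first two steps can be sketched on their own, independent of TFS. The following is only a minimal sketch: the StreamPeek class and its ReadAndRewind method are hypothetical names made up for this illustration, and it assumes the body is UTF-8 text. It works against any System.IO.Stream, so a MemoryStream can stand in for HttpContext.Request.InputStream when trying it out.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical helper, not part of TFS or ASP.NET.
public static class StreamPeek
{
    // Reads the whole stream as UTF-8 text, then puts the stream back where it was
    // so that whoever reads it next sees the same bytes.
    // Returns null when the stream is forward-only and cannot be rewound.
    public static string ReadAndRewind(Stream input)
    {
        if (input == null) throw new ArgumentNullException("input");
        if (!input.CanSeek) return null; // reading would consume the body for good

        long originalPosition = input.Position;
        input.Seek(0, SeekOrigin.Begin);

        using (var copy = new MemoryStream())
        {
            input.CopyTo(copy); // Stream.CopyTo requires .NET 4 or later
            input.Seek(originalPosition, SeekOrigin.Begin);

            // ToArray returns only the bytes written (GetBuffer would also
            // include the unused capacity of the internal buffer).
            return Encoding.UTF8.GetString(copy.ToArray());
        }
    }
}
```

Checking CanSeek first matters because a forward-only stream cannot be restored once it has been read; in that case this sketch simply gives up instead of consuming the request body.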

So, as I already made clear, consider other alternatives before using this as production code.

    // The class name is a placeholder; what matters is that the class
    // implements ITeamFoundationRequestFilter.
    public class WorkItemValidationFilter : ITeamFoundationRequestFilter
    {
        public void BeginRequest(TeamFoundationRequestContext requestContext)
        {
            // Can abort here
            TfsPackageValidator.ValidatePackage();
        }

        public void RequestReady(TeamFoundationRequestContext requestContext)
        {
            // Can abort here
        }

        public void EndRequest(TeamFoundationRequestContext requestContext)
        {
        }

        public void EnterMethod(TeamFoundationRequestContext requestContext)
        {
            // Cannot abort here
        }

        public void LeaveMethod(TeamFoundationRequestContext requestContext)
        {
            // Cannot abort here
        }
    }

The above sample code validates inputs in the BeginRequest method (you can always move the call to RequestReady). The TfsPackageValidator class inspects the current ASP.NET request and deserializes the input parameters. If it finds an Update operation on a Work Item (by looking at the SOAP message body), it continues with the validation process. What we are looking for here is the Package element with the update information about the work item.
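To make the lookup concrete, here is a self-contained sketch that runs the same kind of LINQ to XML query against a sample message. The envelope is hypothetical and heavily trimmed: its shape is inferred only from the element names the validator queries, and a real TFS request carries more elements and attributes. PriorityProbe, ReadRequestedPriority and SampleEnvelope are names invented for this illustration.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper used only to illustrate the query; not part of TFS.
public static class PriorityProbe
{
    // Namespace of the Update operation, as used by the validator in this post.
    static readonly XNamespace Wit =
        "http://schemas.microsoft.com/TeamFoundation/2005/06/WorkItemTracking/ClientServices/03";

    // Assumed, simplified request body (not captured from a real server).
    public const string SampleEnvelope = @"<soap:Envelope xmlns:soap=""http://schemas.xmlsoap.org/soap/envelope/"">
  <soap:Body>
    <Update xmlns=""http://schemas.microsoft.com/TeamFoundation/2005/06/WorkItemTracking/ClientServices/03"">
      <package>
        <Package xmlns="""">
          <UpdateWorkItem WorkItemID=""42"">
            <Columns>
              <Column Column=""Microsoft.VSTS.Common.Priority"">
                <Value>3</Value>
              </Column>
            </Columns>
          </UpdateWorkItem>
        </Package>
      </package>
    </Update>
  </soap:Body>
</soap:Envelope>";

    // Returns the priority the client is trying to save, or null when the
    // message is not a work item update or does not touch the priority field.
    public static int? ReadRequestedPriority(string soapXml)
    {
        var update = XDocument.Parse(soapXml).Descendants(Wit + "Update").FirstOrDefault();
        if (update == null) return null;

        // Elements below Package are in no namespace, so plain names match them.
        var column = update.Descendants("Column")
            .FirstOrDefault(c => (string)c.Attribute("Column") == "Microsoft.VSTS.Common.Priority");
        if (column == null) return null;

        // Casting a missing element to string yields null instead of throwing.
        var text = (string)column.Descendants("Value").FirstOrDefault();

        int priority;
        return int.TryParse(text, out priority) ? priority : (int?)null;
    }
}
```

Calling PriorityProbe.ReadRequestedPriority(PriorityProbe.SampleEnvelope) yields 3, the value a filter would then compare against its limit. Any message without an Update element yields null and is left alone.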

And here is my TfsPackageValidator class:

    using System.IO;
    using System.Linq;
    using System.Text;
    using System.Xml.Linq;

    public static class TfsPackageValidator
    {
        // Entry point: inspects the current request and validates any
        // work item update it carries.
        public static void ValidatePackage()
        {
            var soapXml = ReadHttpContextInputStream();

            // Requests without a body have nothing to validate.
            if (string.IsNullOrEmpty(soapXml))
                return;

            var packageElement = ReadUpdatePackageFromSoapEnvelope(soapXml);
            if (packageElement != null)
                ValidatePackage(packageElement);
        }

        // Rejects the update when it sets the priority field above 2.
        static void ValidatePackage(XElement package)
        {
            var updateWorkItemElement =
                package.Descendants("UpdateWorkItem").FirstOrDefault();
            if (updateWorkItemElement == null)
                return;

            var priorityColumnElement =
                updateWorkItemElement.Descendants("Column").FirstOrDefault(
                    c => (string)c.Attribute("Column") == "Microsoft.VSTS.Common.Priority");
            if (priorityColumnElement == null)
                return;

            // Casting a missing element to string yields null instead of throwing.
            var priorityText = (string)priorityColumnElement.Descendants("Value").FirstOrDefault();

            int priority;
            if (int.TryParse(priorityText, out priority) && priority > 2)
                throw new TeamFoundationRequestFilterException("Priorities greater than 2 are not allowed.");
        }

        // Returns the Update element of the SOAP body, or null when the
        // request is not a work item update.
        static XElement ReadUpdatePackageFromSoapEnvelope(string soapXml)
        {
            var soapDocument = XDocument.Parse(soapXml);
            var updateName =
                XName.Get(
                    "Update",
                    "http://schemas.microsoft.com/TeamFoundation/2005/06/WorkItemTracking/ClientServices/03");

            return soapDocument.Descendants(updateName).FirstOrDefault();
        }

        // Copies the request body into memory and rewinds the input stream
        // so TFS can still read it (assumes the stream is seekable).
        static string ReadHttpContextInputStream()
        {
            var inputStream = System.Web.HttpContext.Current.Request.InputStream;

            using (var memoryStream = new MemoryStream())
            {
                byte[] buffer = new byte[1024 * 4];
                int count;
                while ((count = inputStream.Read(buffer, 0, buffer.Length)) > 0)
                    memoryStream.Write(buffer, 0, count);

                inputStream.Seek(0, SeekOrigin.Begin);

                // ToArray returns only the bytes written; GetBuffer would also
                // return the unused tail of the internal buffer.
                return Encoding.UTF8.GetString(memoryStream.ToArray());
            }
        }
    }

Try running this code by saving a Work Item that has a priority field. Edit a single Work Item to do so; if you edit the work item in a query view, the message passed to the server is slightly different. You should see something similar to the following message in the client application:

The exception message is displayed in the dialog box (as technical information for the administrator).
