This article is a continuation of the series of asynchronous features included in the new Async CTP preview for next versions of C# and VB. Check out Part I for more information.

So, let’s continue with TPL Dataflow:

- Asynchronous functions

- TPL Dataflow

- Task based asynchronous Pattern

Part II: TPL Dataflow

Definition (by quote of Async CTP doc): “TPL Dataflow (TDF) is a new .NET library for building concurrent applications. It promotes actor/agent-oriented designs through primitives for in-process message passing, dataflow, and pipelining. TDF builds upon the APIs and scheduling infrastructure provided by the Task Parallel Library (TPL) in .NET 4, and integrates with the language support for asynchrony provided by C#, Visual Basic, and F#.”

This means: data manipulation processed asynchronously.

“TPL Dataflow is focused on providing building blocks for message passing and parallelizing CPU- and I/O-intensive applications”.

Data manipulation is another hot area when designing asynchronous and parallel applications: how do you sync data access in a parallel environment? how do you avoid concurrency issues? how do you notify when data is available? how do you control how much data is waiting to be consumed? etc.

Dataflow Blocks

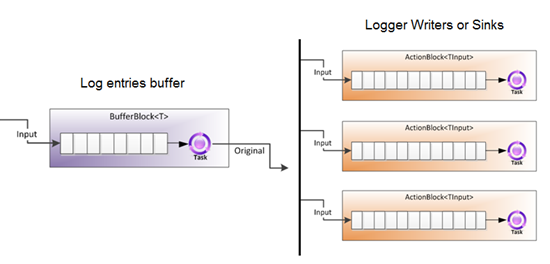

TDF provides data and action processing blocks. Imagine having preconfigured data processing pipelines to choose from, depending on the type of behavior you want. The most basic block is the BufferBlock<T>, which provides an storage for some kind of data (instances of <T>).

So, let’s review data processing blocks available. Blocks a categorized into three groups:

- Buffering Blocks

- Executor Blocks

- Joining Blocks

Think of them as electronic circuitry components :)..

1. BufferBlock<T>: it is a FIFO (First in First Out) queue. You can Post data to it and then Receive it synchronously or asynchronously. It synchronizes data consumption for only one receiver at a time (you can have many receivers but only one will actually process it).

2. BroadcastBlock<T>: same FIFO queue for messages (instances of <T>) but link the receiving event to all consumers (it makes the data available for consumption to N number of consumers). The developer can provide a function to make a copy of the data if necessary.

3. WriteOnceBlock<T>: it stores only one value and once it’s been set, it can never be replaced or overwritten again (immutable after being set). As with BroadcastBlock<T>, all consumers can obtain a copy of the value.

4. ActionBlock<TInput>: this executor block allows us to define an operation to be executed when posting data to the queue. Thus, we must pass in a delegate/lambda when creating the block. Posting data will result in an execution of the delegate for each data in the queue.

You could also specify how many parallel executions to allow (degree of parallelism).

5. TransformBlock<TInput, TOutput>: this is an executor block designed to transform each input, that is way it defines an output parameter. It ensures messages are processed and delivered in order.

6. TransformManyBlock<TInput, TOutput>: similar to TransformBlock but produces one or more outputs from each input.

7. BatchBlock<T>: combines N single items into one batch item (it buffers and batches inputs).

8. JoinBlock<T1, T2, …>: it generates tuples from all inputs (it aggregates inputs). Inputs could be of any type you want (T1, T2, etc.).

9. BatchJoinBlock<T1, T2, …>: aggregates tuples of collections. It generates collections for each type of input and then creates a tuple to contain each collection (Tuple<IList<T1>, IList<T2>>).

Next time I will show some examples of usage for each TDF block.

* Images taken from Microsoft’s Async CTP documentation.